The GPT-3-based language model 123B has captured the attention of researchers and developers alike with its impressive capabilities. It shows a remarkable ability to generate human-like text in a range of styles and formats. From crafting creative content to answering complex queries, 123B continues to push the boundaries of what is feasible in natural language processing.

Examining its inner workings offers a window into the future of AI-powered communication and opens up new possibilities for innovation.

123B: A Benchmark for Large Language Models

The 123B benchmark has become a standard measure of the performance of large language models. This comprehensive benchmark draws on a large dataset of text spanning many domains, enabling researchers to assess how well these models perform on tasks such as question answering.

- This benchmark

- LLMs
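As a rough illustration of how a question-answering benchmark might score a model, the sketch below computes exact-match accuracy between model answers and reference answers. The function names (`normalize`, `exact_match_accuracy`) and the sample data are hypothetical and are not taken from the 123B benchmark itself; real benchmarks often use additional metrics.

```python
import string


def normalize(text: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def exact_match_accuracy(predictions, references):
    """Fraction of predictions that match their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Hypothetical model answers and gold answers, for illustration only.
model_answers = ["Paris.", "four", "H2O"]
gold_answers = ["paris", "4", "h2o"]
accuracy = exact_match_accuracy(model_answers, gold_answers)
print(f"exact-match accuracy: {accuracy:.2f}")
```

Exact match is strict: "four" and "4" count as a mismatch here, which is one reason evaluations commonly report softer metrics alongside it.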

Fine-Tuning 123B for Specific Tasks

Making full use of large language models like 123B often involves fine-tuning them for particular tasks. This process adjusts the model's parameters to improve its performance on a specific task or domain.

- For example, fine-tuning 123B for text summarization would involve adjusting its weights so that it captures the main ideas of a given passage.

- Similarly, fine-tuning 123B for information retrieval would focus on training the model to respond accurately to queries.

In short, fine-tuning 123B for specific tasks unlocks more of its potential and supports the development of effective AI applications across a wide range of domains.
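The idea of starting from existing weights and nudging them toward a task can be shown with a deliberately tiny example. The sketch below is not how 123B is trained: it assumes a two-parameter linear model standing in for the "pretrained" network, a hypothetical task dataset, and plain gradient descent on mean squared error. All names and values are illustrative.

```python
def mse(w, b, data):
    """Mean squared error of the linear model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, data, lr=0.05, steps=500):
    """Continue training from the given weights using gradient descent."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pretrained" weights (assumed) and a small task-specific dataset (assumed).
pretrained_w, pretrained_b = 1.0, 0.0
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
print("loss before:", mse(pretrained_w, pretrained_b, task_data))
print("loss after: ", mse(tuned_w, tuned_b, task_data))
```

The same pattern applies at scale: the starting point is the pretrained weights rather than a random initialization, and the task data determines the direction in which they move.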

Analyzing the Biases within 123B

Examining the biases inherent in large language models like 123B is vital for ensuring responsible development and deployment. These models, trained on massive datasets of text and code, can reflect societal biases present in the data, leading to discriminatory outcomes. By carefully analyzing the responses of 123B across multiple domains and scenarios, researchers can detect potential biases and mitigate their impact. This calls for a multifaceted approach: scrutinizing the training data for preexisting biases, applying techniques to debias the model during training, and periodically monitoring 123B's outputs for signs of bias.
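One common way to probe model responses for bias is a counterfactual test: fill the same prompt templates with different group terms and compare how the model scores them. The sketch below assumes a hypothetical `score_fn` that maps a text to a number; the `toy_score` stand-in is deliberately biased so the gap is visible, and it is not a real model or a part of 123B.

```python
from statistics import mean


def counterfactual_gap(score_fn, templates, group_a, group_b):
    """Mean score difference when only the group term in each template changes."""
    scores_a = [score_fn(t.format(group=group_a)) for t in templates]
    scores_b = [score_fn(t.format(group=group_b)) for t in templates]
    return mean(scores_a) - mean(scores_b)


def toy_score(text):
    """Stand-in scorer (assumption): favors one group term on purpose."""
    return 0.8 if "group A" in text else 0.5


# Hypothetical templates; a real audit would use many more, across domains.
templates = [
    "People from {group} are good at this job.",
    "A person from {group} applied for the loan.",
]

gap = counterfactual_gap(toy_score, templates, "group A", "group B")
print(f"score gap between groups: {gap:.2f}")
```

A gap near zero suggests the scorer treats the two groups alike on these templates; a large gap flags prompts worth closer inspection, though it does not by itself explain the cause.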

Unpacking the Ethical Challenges Posed by 123B

The deployment of large language models like 123B raises a number of ethical concerns. From algorithmic bias to the risk of manipulation, it is vital that we carefully scrutinize the impact of these powerful technologies. Responsibility in the development and deployment of 123B is essential to ensure that it serves society rather than exacerbating existing inequalities.

- For example, 123B could be used to create convincing fake news, which could weaken trust in media outlets.

- Moreover, there are concerns about the impact of 123B on intellectual property.

123B and the Future of AI Language Generation

123B, a massive language model, has ignited discussions about the trajectory of AI language generation. With its extensive capabilities, 123B demonstrates a strong ability to process and produce human-quality language. This development has far-reaching implications for fields such as entertainment.

- Moreover, 123B's accessibility allows engineers to contribute to it and push the boundaries of AI language generation.

- Despite this, there are concerns about the ethical implications of such sophisticated technology. It is important to address these concerns to ensure the beneficial development and deployment of AI language generation.

Ultimately, 123B represents a turning point in the progress of AI language generation. Its influence will continue to be felt across various domains, shaping the way we interact with technology.

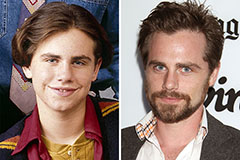
